How We Helped a Construction Services Company Process Bids Twice as Fast

For companies in construction and property services, managing the constant flow of RFPs (Requests for Proposal) is a daily reality. When bid volumes spike, teams scramble. Decisions get rushed. Mistakes slip through.

We worked with a UK-based construction services company facing exactly this challenge. They were drowning in RFPs, relying on institutional knowledge locked inside people's heads, and occasionally accepting contracts that didn't align with their business criteria.

Here's how we used AI to fix it.

The Problem: Volume, Speed, and Inconsistency

Our client's operations team reviewed every incoming RFP manually. There was no formal checklist. Experienced staff mentally applied years of accumulated rules about what made a good bid versus a bad one. This worked when volumes were manageable, but during busy periods, review quality suffered.

The real issue only became clear later. Historical decisions weren't as consistent as the team believed. The same type of bid might be accepted one month and rejected the next, depending on who reviewed it and how busy they were.

Our Approach: Discovery First

Before writing any code, we ran workshops with the frontline team. These were the people actually reviewing bids every day. We used a user-centred discovery process to extract the implicit rules they'd developed over years of experience.

This gave us two things: a weighted set of decision criteria that we could translate into features for a machine learning model, and a clear picture of what "good" looked like from the people who knew best.
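As a rough illustration, a weighted checklist of this kind could be scored as shown below. The criteria names and weights here are hypothetical placeholders, not the client's actual rules.

```python
# Hypothetical criteria and weights, for illustration only.
# A higher weight means the criterion matters more to the decision.
CRITERIA_WEIGHTS = {
    "within_service_area": 3.0,
    "meets_minimum_value": 2.0,
    "realistic_deadline": 2.0,
    "existing_client": 1.0,
}


def weighted_score(rfp: dict[str, bool]) -> float:
    """Return the share of total weight that the RFP satisfies (0.0 to 1.0)."""
    total = sum(CRITERIA_WEIGHTS.values())
    met = sum(
        weight
        for name, weight in CRITERIA_WEIGHTS.items()
        if rfp.get(name, False)  # a missing criterion counts as not met
    )
    return met / total


example_rfp = {
    "within_service_area": True,
    "meets_minimum_value": True,
    "realistic_deadline": False,
    "existing_client": False,
}
score = weighted_score(example_rfp)  # (3.0 + 2.0) / 8.0 = 0.625
```

Writing the rules down in this form makes them reviewable by the whole team, and the same fields can later serve as model inputs.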

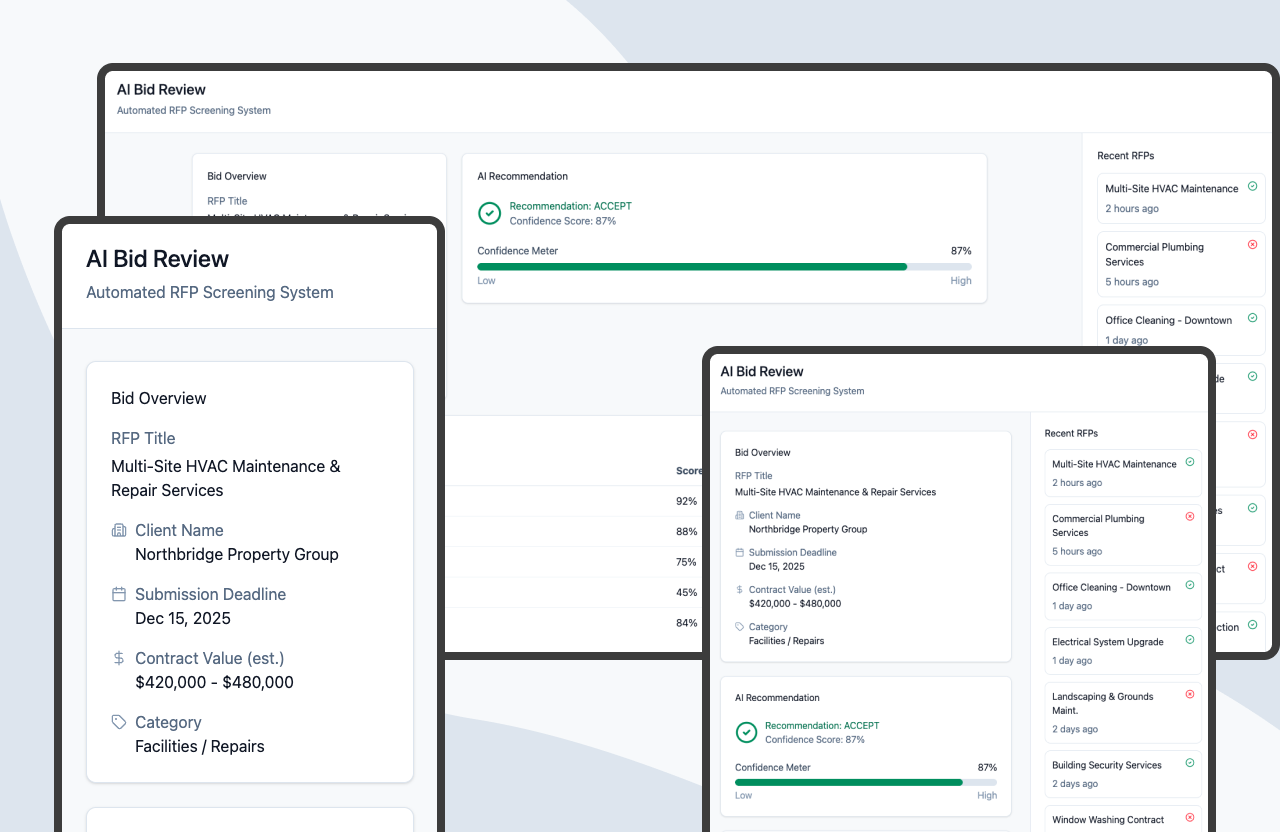

The Solution: ML Classification + LLM Insights

We built a hybrid AI system with two components working together.

The ML model handles classification. Built in Databricks using a random forest algorithm, it analyses the weighted features we derived from the discovery workshops. For each RFP, it outputs an accept/decline recommendation with a confidence score.
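A minimal sketch of this kind of classifier, using scikit-learn's `RandomForestClassifier`, might look like the following. The training data is synthetic, the four feature columns are hypothetical, and the weights are used only to generate example labels. Treating the highest class probability as the confidence score is also an assumption made for this sketch.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

# Synthetic stand-in for historical bids: four hypothetical yes/no criteria.
rng = np.random.default_rng(0)
X = rng.integers(0, 2, size=(200, 4))

# Illustrative labelling rule only: accept (1) when the weighted sum is high.
example_weights = np.array([3.0, 2.0, 2.0, 1.0])
y = (X @ example_weights >= 5.0).astype(int)

model = RandomForestClassifier(n_estimators=100, random_state=0)
model.fit(X, y)


def recommend(features: list[int]) -> tuple[str, float]:
    """Return an accept/decline label and the probability of that label."""
    proba = model.predict_proba(np.array([features]))[0]
    best = int(np.argmax(proba))
    label = "accept" if model.classes_[best] == 1 else "decline"
    return label, float(proba[best])


label, confidence = recommend([1, 1, 1, 1])
```

The probability returned by `predict_proba` is the averaged vote across trees, so it is best read as a rough confidence signal rather than a calibrated likelihood.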

The LLM layer provides context and explainability. Using a lightweight RAG (Retrieval-Augmented Generation) pipeline, we pull relevant historical bid data via API and use GPT-4 to generate specific insights for each decision. These insights might highlight timing patterns ("you typically accept bids in this timeframe") or flag potential issues. Because the LLM works on natural language, it can catch red flags that are worded differently from one RFP to the next.
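The retrieve-then-prompt pattern can be sketched without any external services. In the example below, an in-memory list stands in for the historical bid API, retrieval is a crude word-overlap ranking chosen for brevity, and the prompt wording is invented. All of these are assumptions for illustration, and the call to the LLM itself is deliberately left out.

```python
from dataclasses import dataclass


@dataclass
class HistoricalBid:
    summary: str
    decision: str  # "accepted" or "declined"


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return {word.strip(".,;:()").lower() for word in text.split()} - {""}


def retrieve(query: str, history: list[HistoricalBid], k: int = 2) -> list[HistoricalBid]:
    """Rank past bids by the number of words they share with the query."""
    query_tokens = tokenize(query)
    ranked = sorted(
        history,
        key=lambda bid: len(query_tokens & tokenize(bid.summary)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(rfp_text: str, recommendation: str, similar: list[HistoricalBid]) -> str:
    """Assemble the text that would be sent to the LLM."""
    context = "\n".join(f"- [{bid.decision}] {bid.summary}" for bid in similar)
    return (
        f"New RFP: {rfp_text}\n"
        f"Model recommendation: {recommendation}\n"
        f"Similar past bids:\n{context}\n"
        "Explain the recommendation and flag any risks."
    )


history = [
    HistoricalBid("Office fit-out in London with a twelve week deadline", "accepted"),
    HistoricalBid("Emergency roof repair for a school in Leeds", "accepted"),
    HistoricalBid("Roof replacement with a two week deadline", "declined"),
]
rfp = "Roof repair for a primary school in Leeds, two week deadline"
similar = retrieve(rfp, history)
prompt = build_prompt(rfp, "accept", similar)
```

A production pipeline would more likely rank by embedding similarity than by shared words, but the shape is the same: fetch the closest past decisions, then hand them to the model alongside the new RFP.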

The technical stack is deliberately straightforward: Databricks for data processing and model training, joblib to export the trained model, and a FastAPI application hosted on Railway to serve it.
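The export and reload step can be illustrated with a small round trip through `joblib`. The toy model, file name, and response shape below are assumptions made for this sketch. In a served setup, a FastAPI route handler could wrap a function like `predict_payload`.

```python
import os
import tempfile

import joblib
from sklearn.ensemble import RandomForestClassifier

# Toy stand-in for the trained model: accept (1) only when both flags are set.
X = [[0, 0], [0, 1], [1, 0], [1, 1]] * 5
y = [0, 0, 0, 1] * 5
trained = RandomForestClassifier(n_estimators=20, random_state=0).fit(X, y)

# Training side: serialise the fitted model to a file.
model_path = os.path.join(tempfile.mkdtemp(), "bid_model.joblib")
joblib.dump(trained, model_path)

# Serving side: load the model once at startup and reuse it for every request.
loaded = joblib.load(model_path)


def predict_payload(features: list[int]) -> dict:
    """Build a JSON-friendly response from the loaded model."""
    proba = loaded.predict_proba([features])[0]
    best = int(proba.argmax())
    return {
        "recommendation": "accept" if loaded.classes_[best] == 1 else "decline",
        "confidence": float(proba[best]),
    }


response = predict_payload([1, 1])
```

One practical caveat: a joblib file is a pickle, so the serving environment should pin the same scikit-learn version used for training.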

The Results

The impact was immediate and measurable.